This page describes the LEGO Pixy2 block, which is used in the LEGO Mindstorms EV3 Software. Check out the Pixy2 LEGO Quick Start page for info on how to install the Pixy2 module in Mindstorms.

The Pixy2 block has three main modes of operation: Color connected components, Line Tracking, and RGB Sensing.

You can bring up the Pixy2 block from the Sensor palette in the EV3 Mindstorms environment:

Pixy2 detects objects with a color-based filtering algorithm called the Color Connected Components (CCC) algorithm. Color-based filtering methods are popular because they are fast, efficient, and relatively robust. Most of us are familiar with RGB (red, green, and blue) as a way to represent colors. Pixy2 calculates the color (hue) and saturation of each RGB pixel from the image sensor and uses these as the primary filtering parameters. Changes in lighting and exposure can have a frustrating effect on color filtering algorithms, causing them to break, but the hue of an object remains largely unchanged under such changes, which makes Pixy2’s filtering algorithm robust to them. Additionally, Pixy2 uses a connected components algorithm to determine where one object begins and another ends. Pixy2 then compiles the sizes and locations of each object and reports the largest object to your LEGO program.
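To illustrate why hue and saturation hold up under lighting changes, here is a minimal Python sketch. It is not Pixy2's firmware code: it uses the standard HSV conversion from Python's `colorsys` module as a stand-in, and the function name `hue_saturation` and the pixel values are made up for illustration.

```python
import colorsys

def hue_saturation(r, g, b):
    """Return (hue in degrees, saturation in 0..1) for 8-bit RGB values."""
    h, s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 360.0, s

# The same red object under bright and dim lighting (illustrative values):
# every channel of the dim pixel is half that of the bright pixel.
bright = hue_saturation(200, 40, 40)
dim = hue_saturation(100, 20, 20)

print(bright, dim)
```

The two pixels differ a lot in raw RGB, yet their hue and saturation come out essentially equal, which is the property a hue-based filter relies on.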

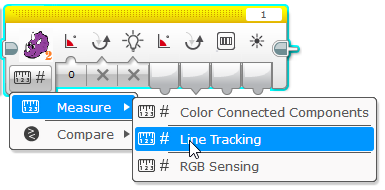

Selecting color connected components mode is done by clicking on Pixy2's mode selector and then selecting Measure➜Color connected components.

Refer to the Pixy2 LEGO Quickstart, which describes how to run a simple CCC demo.

The Pixy2 CCC block is shown below, with labels showing the different inputs and outputs.

Color connected components mode has two sub-modes of operation: General and Signature.

General sub-mode is selected by setting the Signature input to 0. (The Signature input is indicated by the number 3 in the block picture above.) In General sub-mode, Pixy2 will report the largest object that it has detected, regardless of which signature or color code describes the object. The Signature Output tells you the signature or color code number of that object.

As a simple (somewhat contrived!) example, if you wanted to detect both green and purple dinosaurs, you could train signature 1 on the purple dinosaur and signature 2 on the green dinosaur. General sub-mode will then tell you if either type of dinosaur is present. The Signature Output will be 1 if a purple dinosaur is present and appears larger in the image than any green dinosaur. The Signature Output will be 2 if a green dinosaur is present and appears larger in the image than any purple dinosaur. In either case, the X, Y, Width and Height outputs will report the location and size of the largest detected dinosaur. Note that the dinosaurs may be the same physical size, but because one dinosaur is closer to the camera, it will appear larger in the image, and it will be the object that is reported.

In Signature sub-mode, Pixy2 will report the largest object that matches the signature number or color code set in the Signature input (3 in the block diagram above). In this sub-mode, the Signature Output tells you how many objects Pixy2 has detected that match the specified signature or color code.

Similar to the example above, if you had two types of dinosaurs, purple and green, and you trained signature 1 on the purple dinosaur and signature 2 on the green dinosaur, you could restrict detection to only purple dinosaurs by setting the Signature input to 1. And similarly, you could restrict detection to only green dinosaurs by setting the Signature input to 2.

Note that the Signature input can be connected to another output within your program, so you can change the detected signature or color code number during runtime, and Pixy2 will change what it reports accordingly.
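The difference between the two sub-modes can be sketched in Python as follows. This is only a model of the behavior described above, not the block's actual implementation. The `Detection` type, the `report` function, the use of width times height as the measure of "largest", and the value returned when nothing is detected are all assumptions made for illustration.

```python
from typing import NamedTuple

class Detection(NamedTuple):
    signature: int  # signature or color code number
    x: int
    y: int
    width: int
    height: int

def report(detections, signature_input=0):
    """Return (signature_output, largest_detection).

    signature_input == 0 models General sub-mode: the largest object of any
    signature is reported, and signature_output is its signature number.
    Any other value models Signature sub-mode: only matching objects are
    considered, and signature_output is how many of them were detected.
    """
    if signature_input == 0:
        candidates = list(detections)
    else:
        candidates = [d for d in detections if d.signature == signature_input]
    if not candidates:
        return 0, None  # assumption: nothing detected yields 0 and no object
    # Assumption: "largest" means largest area in the image.
    largest = max(candidates, key=lambda d: d.width * d.height)
    if signature_input == 0:
        return largest.signature, largest
    return len(candidates), largest

# Signature 1 = purple dinosaur, signature 2 = green dinosaur.
dinosaurs = [
    Detection(1, 50, 60, 40, 30),    # purple, nearby
    Detection(2, 150, 80, 60, 50),   # green, closest to the camera
    Detection(1, 220, 90, 20, 20),   # purple, far away
]

general = report(dinosaurs)      # General sub-mode
purple_only = report(dinosaurs, 1)  # Signature sub-mode, purple only
```

Here `general` reports signature 2 along with the green dinosaur, while `purple_only` reports a count of 2 along with the larger of the two purple dinosaurs.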

Pixy2 has the ability to detect and track lines. Line-following is a popular robotics demo/application because it is relatively simple to implement and gives a robot simple navigation abilities. Most line-following robots use discrete photosensors to distinguish between the line and the background. This method can be effective, but it tends to work best only with thick lines, and the sensing is localized, making it difficult for the robot to predict the direction of the line or deal with intersections.

Pixy2 attempts to solve the more general problem of line-following by using its image (array) sensor. When driving a car, your eyes take in lots of information about the road, the direction of the road (is there a sharp curve coming up?) and if there is an intersection ahead. This information is important! Similarly, each of Pixy2's camera frames takes in information about the line being followed, its direction, other lines, and any intersections that these lines may form. Pixy2's algorithms take care of the rest. Pixy2 can also read simple barcodes, which can inform your robot what it should do – turn left, turn right, slow down, etc. Pixy2 does all of this at 60 frames-per-second.

Selecting Line Tracking mode is done by clicking on Pixy2's mode selector and then selecting Measure➜Line Tracking.

Refer to the LEGO Line Tracking Test, which describes how to run a simple Line Tracking demo.

The Pixy2 Line Tracking block is shown below, with labels showing the different inputs and outputs. Please refer to the Line Tracking Overview for a description of the terms used below like Vector, barcode and intersection.

Upon encountering an intersection, Pixy2 will find the path (branch) in the intersection that matches the turn angle most closely and the Vector will then become that branch. Turn angles are specified in degrees, with 0 being straight ahead, left being 90 and right being -90 (for example), although any valid angle value can be used. Valid angles are between -180 and 180.

For example, if you wanted your robot to always turn left at intersections, you could set the Turn Angle to 90. Or you could set the Turn Angle programmatically, based on what your program decides. The Turn Angle is 0 by default, which corresponds to the straightest path upon encountering an intersection.
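The branch selection described above can be sketched in Python as picking the branch whose angle is closest to the Turn Angle. This is an illustrative model only: the function names and the idea that each branch is described by a single angle in degrees are assumptions, not Pixy2's actual algorithm.

```python
def angle_difference(a, b):
    """Smallest absolute difference between two angles, in degrees (0..180)."""
    diff = (a - b) % 360.0
    return min(diff, 360.0 - diff)

def choose_branch(branch_angles, turn_angle=0):
    """Return the branch angle closest to turn_angle.

    Angles follow the convention above: 0 is straight ahead,
    90 is left, and -90 is right.
    """
    if not -180 <= turn_angle <= 180:
        raise ValueError("turn_angle must be between -180 and 180")
    return min(branch_angles, key=lambda angle: angle_difference(angle, turn_angle))

# A hypothetical three-way intersection: roughly right, straight, and left.
branches = [-85, 5, 95]

left = choose_branch(branches, 90)  # always turn left
straight = choose_branch(branches)  # default Turn Angle of 0
```

With these made-up branch angles, `left` is 95 and `straight` is 5. The wrap-around handling in `angle_difference` matters near the ends of the range, where -170 and 180 are only 10 degrees apart.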

We noticed that several Pixy1 users were using Pixy to just sense color values, but using the color connected components algorithm to do so. To make these kinds of color sensing applications easier (we're talking to you, Rubik's cube solvers!), we've added the “RGB sensing” functionality, which allows your LEGO program to retrieve the RGB values of any pixel in Pixy2's image.

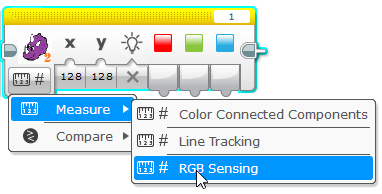

Selecting RGB sensing mode is done by clicking on Pixy2's mode selector and then selecting Measure➜RGB Sensing.

Refer to the LEGO RGB Sensing Test, which describes how to run a simple RGB sensing demo.

The Pixy2 RGB sensing block is shown below, with labels showing the different inputs and outputs.

There are several ways to use conditions based on what Pixy2 detects in your program. Below we describe the different blocks that use “Pixy2 conditionals”.

This block is available by selecting Compare in the Mode Selector as shown below:

Here is what the Compare Signature block looks like:

Compare Signature mode compares the number of detected objects to the Threshold Value (3) using the selected Compare Type (2). The True/False result is output in Compare Result (4), and the number of detected objects is output in the Number of Blocks (5) output.
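In text form, the comparison amounts to something like the Python sketch below. The set of compare types shown here and the way they are named are assumptions for illustration; the block itself offers its Compare Type choices through the EV3 interface.

```python
import operator

# Assumed set of compare types, keyed by a readable symbol.
COMPARE_TYPES = {
    "=": operator.eq,
    "!=": operator.ne,
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
    "<=": operator.le,
}

def compare_signature(number_of_blocks, compare_type, threshold):
    """Return (compare_result, number_of_blocks), like outputs (4) and (5)."""
    result = COMPARE_TYPES[compare_type](number_of_blocks, threshold)
    return result, number_of_blocks

# Pixy2 currently sees 3 objects; is that at least 2?
result, count = compare_signature(3, ">=", 2)
```

The same comparison underlies the Wait, Loop, and Switch variants described below; they differ only in what the program does with the True/False result.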

These blocks are available through the Wait block, which is one of the Flow Control blocks. There are two options available: Wait Signature Compare and Wait Signature Change.

This block is available by selecting Compare then Signature as shown below:

Here is what the Wait Signature Compare block looks like:

The Wait Signature Compare block compares the number of detected objects to the Threshold Value (3) using the selected Compare Type (2). If the result is false, the program will be paused. If the result is true, the program will resume execution, and the number of detected objects is output in Number of Blocks (4).

This block is available by selecting Change then Signature as shown below:

Here is what the Wait Signature Change block looks like:

The Wait Signature Change block waits for a change in the number of specified objects detected by Pixy2. If the number of objects that match the signature specified in the Signature input (1) changes by the number indicated in the Amount (2) input, the block returns, and the number of detected objects is output in Number of Blocks (3).
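A rough Python model of this waiting behavior is shown below. It is a sketch under assumptions: the `read_count` callable is a hypothetical stand-in for querying Pixy2, the change is measured against the count seen when the block starts, and a change in either direction (more or fewer objects) counts. The actual block may differ on these points.

```python
def wait_signature_change(read_count, amount=1):
    """Block until the object count differs from its starting value by at
    least `amount`, then return the new count (the Number of Blocks output).
    """
    baseline = read_count()
    while True:
        current = read_count()
        if abs(current - baseline) >= amount:
            return current

# Simulated readings in place of a real camera: the count starts at 2
# and eventually reaches 4.
readings = iter([2, 2, 3, 2, 4])
number_of_blocks = wait_signature_change(lambda: next(readings), amount=2)
```

With these simulated readings, the call returns once the count reaches 4, the first reading that differs from the starting count of 2 by the requested amount.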

This block is available through the Loop block, which is one of the Flow Control blocks. Bring it up by selecting Pixy Camera then Signature in the Loop block Mode Selection.

Here is what the Loop Pixy2 Compare block looks like:

The Loop Pixy2 Compare block compares the number of detected objects to the Threshold Value (3) using the selected Compare Type (2). If the result is false, the loop will continue looping. If the result is true, the loop will exit.

This block is available through the Switch block, which is one of the Flow Control blocks. Bring it up by selecting Pixy2 Camera, then Compare, then Signature in the Switch block Mode Selection.

Here is what the Switch Pixy2 Compare block looks like:

The Switch Pixy2 Compare block compares the number of detected objects to the Threshold Value (3) using the selected Compare Type (2). If the result is false, the false case is executed. If the result is true, the true case is executed.